AI Explainability/Interpretability Continues to Advance

Explainable (or interpretable) AI, meaning systems transparent enough that a human expert can identify how their decisions are made, will gain relevance in 2020. According to a 2018 report from Accenture, greater AI transparency empowers humans to take the necessary corrective action based on the explanations provided. This trend was strong in 2019, and by all indications 2020 will see even more focus on it.

“Within three years, we believe [explainable AI] will have come to dominate the AI landscape for businesses — because it will enable people to understand and act responsibly, as well as creating effective teaming between human and machines,” commented Accenture in their report.

The emphasis on transparency is driven in part by provisions in new legislation such as GDPR, which require companies to give consumers the right to ask how AI tools make decisions about them. Perhaps more importantly, public perception of how AI affects people's lives is driving the change. Gartner predicts that by 2023, over 75% of large enterprises will employ specialists in AI behavior forensics, privacy, and customer trust to reduce the risk to their valuable brands and reputations.
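
To make "a human expert can identify how decisions are made" concrete, here is a minimal Python sketch of one of the simplest transparent models: a linear scorer whose output decomposes exactly into per-feature contributions (weight times value). The class name `LinearScorer`, the feature names, and the weights are hypothetical, invented only for illustration; techniques for explaining opaque models such as deep networks are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """A toy linear scoring model whose decisions can be read off directly.

    Hypothetical example: feature names and weights are illustrative only.
    """
    bias: float
    weights: dict[str, float]

    def score(self, features: dict[str, float]) -> float:
        # The score is the bias plus the weighted sum of the features.
        return self.bias + sum(w * features[name] for name, w in self.weights.items())

    def explain(self, features: dict[str, float]) -> list[tuple[str, float]]:
        # Each feature contributes weight * value to the score; sort by
        # absolute contribution so the most influential features come first.
        contributions = [(name, w * features[name]) for name, w in self.weights.items()]
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical credit-style applicant with made-up, pre-scaled features.
    model = LinearScorer(
        bias=0.5,
        weights={"income": 0.4, "debt_ratio": -1.5, "late_payments": -0.8},
    )
    applicant = {"income": 2.0, "debt_ratio": 0.6, "late_payments": 1.0}
    print(f"score = {model.score(applicant):.2f}")
    for name, contribution in model.explain(applicant):
        print(f"{name:>14}: {contribution:+.2f}")
```

Because the bias plus the listed contributions add up to the score, a reviewer can see exactly which feature (here `debt_ratio`, listed first) pushed the decision down, which is the kind of answer a consumer asking "how was this decided?" is looking for.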

Consolidation in the Data Science Ecosystem Rolls On

2019 was a year of intense M&A activity and consolidation in the industry. In June, Salesforce announced its acquisition of Tableau, and Google announced that it would acquire Looker. Those were the biggest deals, but many smaller consolidations occurred as well: Lionbridge acquired Gengo, Alteryx acquired ClearStory Data, DataRobot acquired three companies (ParallelM, Cursor, and Paxata), Appen acquired Figure Eight, Altair acquired Datawatch Corp., Cloudera acquired Arcadia Data, Sisense merged with Periscope Data, Logi Analytics acquired Zoomdata, Qlik acquired Attunity, MRI Software acquired Leverton, Idera acquired WhereScape, HPE acquired MapR, and SymphonyAI acquired Ayasdi, to name just a few.

Continuing into 2020, expect leading tech companies to leverage their assets by driving further consolidation in the data science market, and expect the appetite for third-party providers to grow. Part of what is driving this trend is the emergence of an AI-as-a-service market, which many companies are now recognizing. That market will play a much larger role in how incumbents acquire the capabilities they need to leverage AI.

Python Moves to Number One Position

It’s been a slog over the past few years, with plenty of online flame wars along the way, but it’s now clear that Python has taken the lead as the language of choice for data scientists.

Stack Overflow’s annual Developer Survey, released in April 2019 (its respondents included data scientists and machine learning specialists), stated:

“Python, the fastest-growing major programming language, has risen in the ranks of programming languages in our survey yet again, edging out Java this year and standing as the second most loved language (behind Rust).”

It’s tough for me to admit this, as I’ve been a strong supporter of R since my grad school days, but the writing is on the wall. For instance, just about all the research papers on arXiv.org that link to code on GitHub use Python. I believe this trend will continue into 2020. I myself use both R and Python regularly in my data science work. Time will tell what the future holds!

AutoML

In January and again in April 2019, I wrote articles on the upswing of interest in automated machine learning (AutoML) tools. At the time, I reported on 23 vendors offering such products. I kept adding to the list throughout 2019, and it now includes nearly 40 tools. AutoML is a very popular technology class, and I fully expect even more interest in 2020, with more companies evaluating and adopting these tools.

In general, companies are investing heavily in building and buying AutoML tools and services, clearly motivated by the prospect of making the data science process cheaper and easier. This automation particularly suits smaller and less technical businesses, which can use these tools and services to access data science technology without building out their own teams.

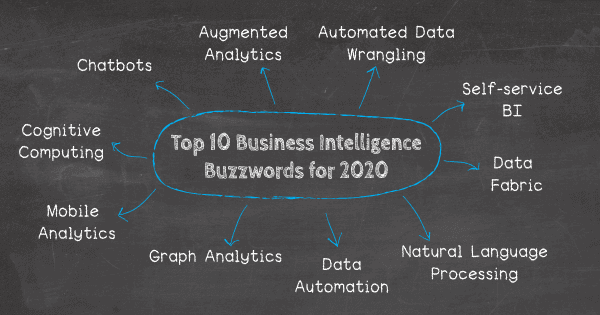
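
At its core, AutoML automates the search over candidate models that a data scientist would otherwise run by hand. The toy Python sketch below shows only that core loop, under simplifying assumptions: a few hypothetical candidates (a mean baseline and k-nearest-neighbour regressors with different k) are each scored by 5-fold cross-validated mean squared error, and the best one is selected automatically. It is not how any particular vendor's product works; real AutoML tools also search over feature engineering, hyperparameters, and ensembles.

```python
import random
from statistics import mean
from typing import Callable

Point = tuple[float, float]  # (x, y)
# A "trainer" takes training points and returns a prediction function.
Model = Callable[[list[Point]], Callable[[float], float]]


def mean_baseline(train: list[Point]) -> Callable[[float], float]:
    # Always predicts the training-set mean of y, ignoring x.
    avg = mean(y for _, y in train)
    return lambda x: avg


def knn(k: int) -> Model:
    # Returns a trainer for k-nearest-neighbour regression on 1-D inputs.
    def fit(train: list[Point]) -> Callable[[float], float]:
        def predict(x: float) -> float:
            nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
            return mean(y for _, y in nearest)
        return predict
    return fit


def cv_mse(fit: Model, data: list[Point], folds: int = 5) -> float:
    # k-fold cross-validation: hold out each fold in turn, average the MSE.
    errors = []
    for i in range(folds):
        held_out = data[i::folds]
        train = [p for j, p in enumerate(data) if j % folds != i]
        predict = fit(train)
        errors.append(mean((predict(x) - y) ** 2 for x, y in held_out))
    return mean(errors)


def auto_select(candidates: dict[str, Model], data: list[Point]) -> tuple[str, float]:
    # The "automated" part: score every candidate the same way, keep the best.
    scores = {name: cv_mse(fit, data) for name, fit in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores[best]


if __name__ == "__main__":
    # Hypothetical synthetic data: y = 2x plus Gaussian noise.
    rng = random.Random(0)
    data = [(x, 2.0 * x + rng.gauss(0, 0.5)) for x in (i / 10 for i in range(100))]
    rng.shuffle(data)
    candidates = {"mean": mean_baseline, "1-NN": knn(1), "5-NN": knn(5), "15-NN": knn(15)}
    print(auto_select(candidates, data))
```

Adding a candidate is just another dictionary entry, which is much of the appeal: the evaluation protocol stays fixed while the search space grows.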

NLP

Natural Language Processing (NLP) offers a strong value proposition by giving business users a simpler way to ask questions about data and receive explanations of the insights. Conversational analytics takes NLP a big step further by letting such questions be posed and answered verbally rather than in text. In 2020, NLP and conversational analytics will continue to boost analytics and BI adoption significantly, including among new classes of business users, particularly front-office workers.

A big driver of this trend is that, following major breakthroughs in deep learning research, NLP has firmly entered data science and become fully integrated into routine data analytics. Check out this informative guide for a technical overview of the most important advancements in NLP over the past few years.
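
The idea of a "simpler way to ask questions about data" can be illustrated with a deliberately toy Python sketch that turns a question such as "total sales by region" into a group-by aggregation. It uses a single hand-written regular expression rather than a trained language model, and the table, column names, and supported phrasings are all hypothetical; real NLP and conversational analytics products rely on much richer language understanding.

```python
import re
from collections import defaultdict

# Hypothetical sales table; the column names are illustrative assumptions.
ROWS = [
    {"region": "East", "product": "A", "sales": 120.0},
    {"region": "West", "product": "A", "sales": 80.0},
    {"region": "East", "product": "B", "sales": 50.0},
    {"region": "West", "product": "B", "sales": 150.0},
]

# Maps the aggregation word in the question to a function over a list of numbers.
AGGREGATES = {
    "total": sum,
    "sum": sum,
    "average": lambda values: sum(values) / len(values),
    "max": max,
    "min": min,
}

# Matches phrasings such as "what is the total sales by region?"
PATTERN = re.compile(r"\b(total|sum|average|max|min)\s+(\w+)\s+by\s+(\w+)", re.IGNORECASE)


def answer(question: str, rows: list[dict]) -> dict[str, float]:
    """Parse '<aggregate> <measure> by <dimension>' and compute the result."""
    match = PATTERN.search(question)
    if match is None:
        raise ValueError(f"Sorry, I can't parse: {question!r}")
    agg_word, measure, dimension = (g.lower() for g in match.groups())
    # Group the measure's values by the requested dimension.
    groups: dict[str, list[float]] = defaultdict(list)
    for row in rows:
        groups[row[dimension]].append(row[measure])
    aggregate = AGGREGATES[agg_word]
    return {key: aggregate(values) for key, values in groups.items()}


if __name__ == "__main__":
    print(answer("What is the total sales by region?", ROWS))
    print(answer("Show me average sales by product", ROWS))
```

The point of the sketch is the interface, not the parsing: the business user types a question, and the system maps it to the same aggregation an analyst would otherwise write by hand.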

Cloud-Based Big Data Science

Cloud computing is a strong trend that’s taking the field of data science by storm. It gives any data scientist, anywhere, access to virtually limitless processing power and storage capacity. Computing power on demand is very attractive to data scientists who want resources exactly when they need them.

There is a growing number of cloud options for data scientists to choose from: Amazon SageMaker, Google BigQuery ML, Google Dataproc, Microsoft Azure ML, IBM Cloud, NVIDIA GPU Cloud, and many smaller providers such as BigML. If you’re just getting started in the cloud, try Google Colaboratory, which is what I recommend to my students.

Everything from data volumes to processing power is growing at a steady pace. As the field of data science continues to mature in 2020, we may eventually see the entire data science workflow conducted in the cloud, because of the sheer volume of data and the compute resources required to process it.

Graph Databases

As big data continues to march forward, businesses are asking increasingly complex questions across blended data sets of structured and unstructured information. Analyzing data this complex at scale is not straightforward, and in many cases not even possible, with traditional database systems and query tools.

Unlike their relational brethren, graph databases store the relationships between entities such as people, places, and things as data in their own right, making it straightforward to see how those entities are related to one another. Applications of the technology range from anti-money laundering and fraud detection to geospatial analysis and supply chain analysis.

Gartner predicts that the application of graph databases will grow 100% annually over the next few years, accelerating data preparation and enabling more complex and adaptive data science. Two leaders in the graph database space are Neo4j and TigerGraph.
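
Fraud and anti-money-laundering investigations often ask a relationship question such as "which accounts received money, directly or indirectly, from this flagged account within a few transfers?" A graph database answers this as a variable-length path traversal. The minimal Python sketch below shows the same idea with an in-memory adjacency list and breadth-first search; the account IDs, the `TRANSFERS` graph, and the two-hop limit are hypothetical, and this is not the query API of Neo4j, TigerGraph, or any other product.

```python
from collections import deque

# Hypothetical money-transfer graph: account -> accounts it sent money to.
TRANSFERS: dict[str, list[str]] = {
    "acct_1": ["acct_2", "acct_3"],
    "acct_2": ["acct_4"],
    "acct_3": ["acct_4", "acct_5"],
    "acct_4": ["acct_6"],
    "acct_5": [],
    "acct_6": [],
    "acct_7": ["acct_1"],
}


def within_hops(graph: dict[str, list[str]], start: str, max_hops: int) -> dict[str, int]:
    """Breadth-first search returning every account reachable from `start`
    in at most `max_hops` transfers, mapped to its hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if distance[node] == max_hops:
            continue  # do not expand beyond the hop limit
        for neighbour in graph.get(node, []):
            if neighbour not in distance:
                # BFS visits nodes in order of distance, so the first visit is the shortest.
                distance[neighbour] = distance[node] + 1
                queue.append(neighbour)
    del distance[start]  # the flagged account itself is not a result
    return distance


if __name__ == "__main__":
    # Accounts within two transfers of a flagged account become candidates for review.
    print(within_hops(TRANSFERS, "acct_1", max_hops=2))
```

With a two-hop limit, `acct_6` (three transfers away) is excluded, and `acct_7` is never reached because it only sends money to `acct_1`; edges are followed in the direction of the transfer. A relational database would need a self-join per hop to express the same question, which is the kind of complexity graph databases are designed to avoid.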